In the world of global health, the Bill and Melinda Gates Foundation’s foray into artificial intelligence (AI) has become a topic of intense scrutiny. In a recent development, a trio of academics from the University of Vermont, Oxford University, and the University of Cape Town has offered their insights into the controversial push towards using AI to advance global health.

Revealing the $5 Million Plan

The catalyst for this critique was an announcement in early August, in which the Gates Foundation unveiled a new initiative worth $5 million. The goal was to fund 48 projects tasked with implementing AI large language models (LLMs) in low-income and middle-income countries. The objective? To improve the livelihoods and well-being of communities on a global scale.

Altruism or Experimentation?

Each time the Foundation positions itself as the benefactor of low- or middle-income nations, it stirs skepticism and unease. Observers critical of the organization and its founder’s apparent “savior” complex question the altruistic motives behind the many “experiments” carried out.

Leapfrogging Global Health Inequalities?

A significant question arises: Is the Gates Foundation trying to “leapfrog global health inequalities”? The academic paper authored by the researchers delves into this question, raising concerns about the possible consequences of such endeavors.

Unraveling the AI Dilemma

The study does not shy away from expressing skepticism. It highlights three key reasons why the unbridled implementation of AI in already fragile health care systems may do more harm than good.

Biased Data and Artificial Intelligence:

The nature of AI, particularly machine learning, comes under scrutiny. The researchers stress that feeding biased or low-quality data into a machine learning system could perpetuate and amplify existing biases, potentially leading to adverse outcomes.

Structural Racism and AI Learning:

Considering the structural racism embedded in the world’s governing political economy, the paper questions the potential outcomes of AI learning from a dataset that reflects such systemic biases.

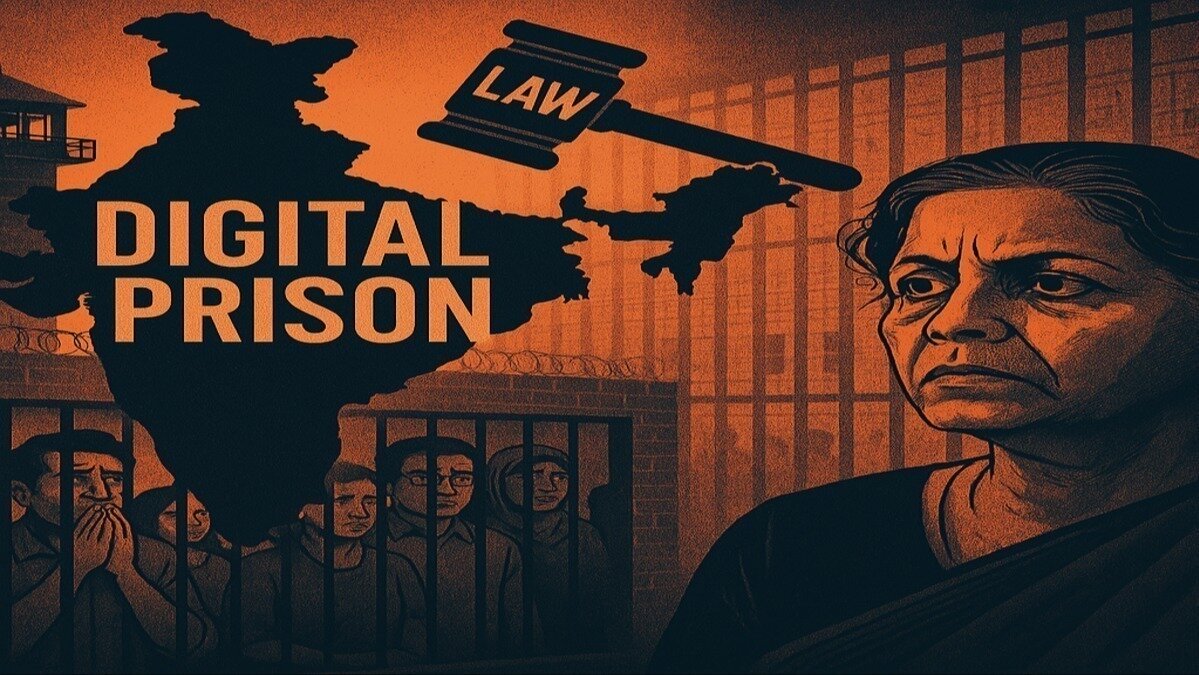

Lack of Democratic Regulation and Control:

A critical issue raised is the absence of genuine, democratic regulation and control in the deployment of AI in global health. This problem extends beyond the immediate scope, highlighting broader challenges in the regulatory landscape.

In conclusion, the Gates Foundation’s AI initiative, while promising positive transformations in global health, is met with skepticism from academics. The potential pitfalls of biased data, systemic issues, and the lack of robust regulation underscore the need for a careful and transparent approach in leveraging AI for the betterment of vulnerable communities worldwide.